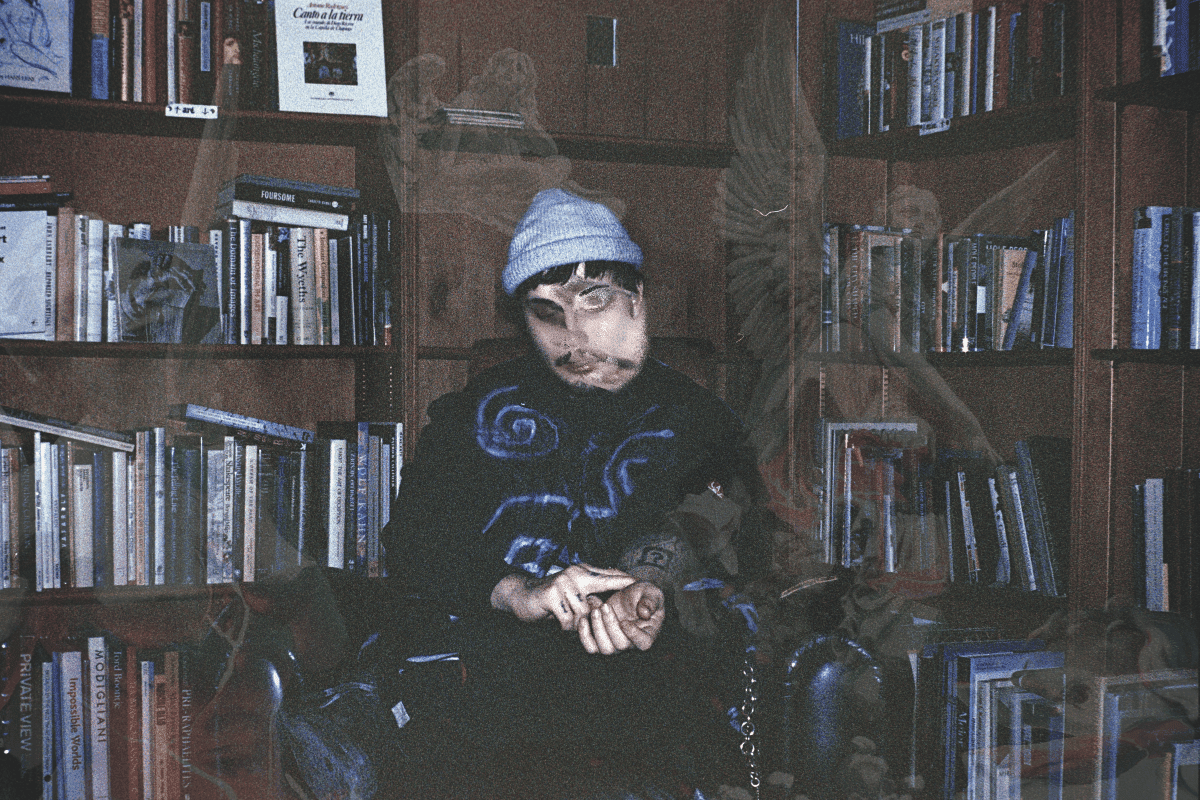

Alberta, Canada producer Little Snake, real name Gino Serpentini, released his new album A Fragmented Love Story, Written By The Infinite Helix Architect this past Friday. Out on Brainfeeder, it features the likes of Amon Tobin, Shrimpnose and Flying Lotus (twice). The project is complex, zany and at times just plain strange, like listening to what Emmett “Doc” Brown from Back to the Future would come up with in his experiments. The tracks zig and then zag, taking you in all sorts of unexpected directions. Sounds seem to collapse into each other at random, but it still works. If you want to listen to something well outside the norm, this is for you.

Serpentini describes the album as “intended to be a signal to those who have experienced the consciousness of a dualistic pattern they may find themselves in.”

This amalgamation of sounds was created without many frills, on an old 2008 MacBook Pro. We wanted to find out how he did it, and he explains in a new How It Was Made feature. This one is a bit different: there are no photos from Little Snake, just words, but he breaks down the process and the journey this album went through.

Pick up your copy of A Fragmented Love Story, Written By The Infinite Helix Architect out now on Brainfeeder.

Words By Little Snake

In essence, this entire project was built from absolute rock bottom up. I made every effort I could with the little I had, not only to make every sound fit the timbre of the album and the individual tracks as closely as possible, but also to make the production techniques themselves as conceptually advanced as I could.

This all really started several years back on my secondhand 2008 MacBook Pro. I had just moved my hard drive into another computer of the exact same model because my screen was shattered and almost unreadable, and as soon as I got that little boost to make production a bit easier, things started going up from there. Don't get me wrong, at the time of purchase the replacement laptop was still about 10 years old. A lot of the time the headphone jack would not work, and that was pretty much the only output I was using.

I wrote pretty much the entire body of the album on a wired pair of Apple earbuds and mixed the tracks down whenever I could plug the laptop into my television, which happened to be even older. About halfway through the writing process I started referencing the tracks at studios belonging to people around me, and it became heart-wrenchingly audible that I just didn't have the skill to make anything sound good on any of the speakers I had around me.

During this time my career started picking up a bit, and I was able to start funding the sonic capabilities the album direly needed. I won't lie: I was using what I had mostly because I really couldn't afford anything else. It was a tough time, but as I capitalized as best I could on what I had, I started gaining access to resources that helped immensely. By the time I stopped using that laptop, I had really learned to use the spectrum displays in my DAW to my advantage and compare what they showed against the systems I had for reference, be it at a show I was playing or at another studio.

With perfect timing, I was able to afford a private treated studio space as well as a much more powerful desktop Mac. I got the first pair of speakers and subwoofer I could get my hands on and began mixing the absolute hell out of it. It was brutal, probably even psychotic. I became so meticulous about how I wanted it to sound that I ended up making a lot of it sterile and had to add color back into the mix. All in all, I got it to a professional enough quality, and I was even able to start working experimental palettes into the mix, along with a general theme of the sort of “classic rock” acoustic quality I had always dreamed of pairing with music like this.

Throughout this whole process, I was working strictly in the box in Ableton. On the first laptop, I worked in Ableton 8 to the best of my ability. It really didn't have a lot of the features Ableton has today, so if there was a production technique I wanted to learn, I had to find some workaround that version of the software could handle. And because I couldn't achieve a lot of modern practices conventionally, I ended up working in very experimental ways just to create any sound at all.

One specific technique I used, which became the most prominent sound on the album, was actually the result of me accidentally breaking the program. I had learned from a friend that a lot of time-based effects leave sound artifacts long after they become inaudible, and that you can bring those artifacts up simply by compressing them to the furthest extent and limiting them. Around the same time, I learned you could create time-based effects by duplicating certain EQs several dozen times. I experimented with joining these two forces together for a while and ended up with an insanely CPU-intensive FX chain that creates some extremely otherworldly sounds. I haven't left it out of a session since I created it. Every track on the album benefits from it, and it is a shining example of why putting yourself in a creative box breeds innovation.
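The actual Ableton chain isn't documented here, but one plausible way to picture its two ingredients is sketched below in stdlib Python. It assumes the "time-based effect" from duplicating EQs comes from the ringing of many identical, narrow peaking filters run in series (coefficients from the RBJ Audio EQ Cookbook), and it stands in for "compressing and limiting" with a huge make-up gain followed by a hard clip. The frequency, Q, gain, copy count and every function name are hypothetical, not the author's settings.

```python
import math


def peaking_coeffs(sr, f0, q, gain_db):
    """RBJ Audio EQ Cookbook peaking-EQ biquad, normalized so a0 == 1."""
    a = 10 ** (gain_db / 40)
    w0 = 2 * math.pi * f0 / sr
    alpha = math.sin(w0) / (2 * q)
    cos_w0 = math.cos(w0)
    a0 = 1 + alpha / a
    b = ((1 + alpha * a) / a0, (-2 * cos_w0) / a0, (1 - alpha * a) / a0)
    den = ((-2 * cos_w0) / a0, (1 - alpha / a) / a0)
    return b, den


def biquad(x, b, den):
    """Direct Form I: y[n] = b0*x[n] + b1*x[n-1] + b2*x[n-2] - a1*y[n-1] - a2*y[n-2]."""
    b0, b1, b2 = b
    a1, a2 = den
    x1 = x2 = y1 = y2 = 0.0
    y = []
    for xn in x:
        yn = b0 * xn + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2
        x2, x1 = x1, xn
        y2, y1 = y1, yn
        y.append(yn)
    return y


def eq_stack(x, sr, copies=36, f0=1000.0, q=8.0, gain_db=1.0):
    """Hypothetical 'duplicated EQ': the same narrow boost applied `copies` times in series."""
    b, den = peaking_coeffs(sr, f0, q, gain_db)
    for _ in range(copies):
        x = biquad(x, b, den)
    return x


def raise_tail(x, gain_db=60.0, ceiling=0.9):
    """Crude stand-in for extreme compression + limiting: big gain, then hard clip."""
    g = 10 ** (gain_db / 20)
    return [max(-ceiling, min(ceiling, s * g)) for s in x]


def ring_length(x, threshold=1e-3):
    """Index of the last sample whose magnitude exceeds `threshold`."""
    return max((i for i, s in enumerate(x) if abs(s) > threshold), default=0)
```

Passing an impulse through `eq_stack` with `copies=1` and with `copies=36` and comparing `ring_length` shows the stacked version ringing for noticeably longer, because each copy smears the filter's resonance further in time; `raise_tail` then drags that faint ringing up toward full scale, which is the "hidden artifacts" idea in miniature.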

In the last year before finishing it, I upgraded to Live 9, then 10, and recently 11. They all have amazing, groundbreaking tools, but I'm just as thankful that having so little forced me to know this program inside and out.

Although no hardware was used on the entire LP (aside from maybe some instrumentation and synths from the collaborators), I did use a handful of external VSTs. Most notably, my first ever plugin purchase was from a company called Zynaptiq. A friend briefly showed me their plugin Morph 2, and although I didn't know him well, he could not stress enough that I would find it interesting. So, sure enough, I got home, looked into it and bought it on the spot. It's… strange. I'm not entirely sure how it works, but I believe it uses a technique called bin shifting to morph any two sounds together in many different ways. This is the driving force behind a lot of the “talking bass lines” and weirdly vocal, formant-based textures. It's an insane plugin, go check it out. I'm astounded it isn't as big as, say, something like Serum. It's basically a vocoder, but you can feed it any sound as an oscillator.
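Morph 2's internals aren't published, and the bin-shifting description above is the author's own guess, so the sketch below does not reproduce it. Instead it shows the classic channel vocoder the author compares it to: a bank of band-pass filters measures how loud the modulator is in each band, and those loudness envelopes are imposed on the same bands of an arbitrary carrier sound. The band count, frequency range, Q, smoothing time and function names are all assumptions for illustration.

```python
import math


def bandpass_coeffs(sr, f0, q):
    """RBJ Audio EQ Cookbook band-pass (0 dB peak gain), normalized so a0 == 1."""
    w0 = 2 * math.pi * f0 / sr
    alpha = math.sin(w0) / (2 * q)
    a0 = 1 + alpha
    b = (alpha / a0, 0.0, -alpha / a0)
    den = (-2 * math.cos(w0) / a0, (1 - alpha) / a0)
    return b, den


def biquad(x, b, den):
    """Direct Form I: y[n] = b0*x[n] + b1*x[n-1] + b2*x[n-2] - a1*y[n-1] - a2*y[n-2]."""
    b0, b1, b2 = b
    a1, a2 = den
    x1 = x2 = y1 = y2 = 0.0
    y = []
    for xn in x:
        yn = b0 * xn + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2
        x2, x1 = x1, xn
        y2, y1 = y1, yn
        y.append(yn)
    return y


def envelope(x, sr, smooth_ms=20.0):
    """One-pole low-pass on the rectified signal: a simple loudness follower."""
    k = math.exp(-1.0 / (sr * smooth_ms / 1000))
    level, env = 0.0, []
    for s in x:
        level = k * level + (1 - k) * abs(s)
        env.append(level)
    return env


def vocode(modulator, carrier, sr, bands=16, low=100.0, high=8000.0, q=6.0):
    """Channel vocoder: shape each carrier band by the modulator's loudness in that band."""
    n = min(len(modulator), len(carrier))
    out = [0.0] * n
    for k in range(bands):
        # log-spaced centre frequencies from `low` to `high` (keep `high` below sr / 2)
        f0 = low * (high / low) ** (k / (bands - 1))
        b, den = bandpass_coeffs(sr, f0, q)
        env = envelope(biquad(modulator[:n], b, den), sr)
        band = biquad(carrier[:n], b, den)
        for i in range(n):
            out[i] += band[i] * env[i]
    return out
```

The carrier can be any signal (noise, a pad, a bass line), which is the "feed it any sound as an oscillator" idea; the modulator only contributes its per-band loudness, so a silent modulator produces silence no matter what the carrier is.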

Alongside that one, my dear friend and collaborator on the album SABROI was just starting to become super proficient in Max for Live. He had been creating these wild DIY plugins that were incredibly useful and specific to my sound design. A few examples are his console emulator “Console,” his resonator plugin “PDelay” and my favorite, “Lossy.AXMD.” That last one essentially replicated bit reduction and audio quality loss, a sound I was already emulating through generation loss: exporting a sound 20 times, each time at a lower bit depth than before. Sabroi is a genius, and has just recently started to release his plugins publicly. Go check him out for sure.
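The export-and-degrade loop described above can be approximated as repeated re-quantization. This sketch assumes each "export" rounds samples to a signed integer grid with triangular (TPDF) dither of the kind DAW exporters commonly offer, starting at 24 bits and dropping one bit per pass over 20 passes; those numbers and the function names are illustrative, not the author's actual settings, and this is not a model of Lossy.AXMD.

```python
import random


def quantize(samples, bits, rng=None):
    """Round samples in [-1.0, 1.0] to a signed `bits`-bit grid, optionally with TPDF dither."""
    levels = 2 ** (bits - 1)          # steps per polarity, e.g. 32768 at 16 bits
    top = (levels - 1) / levels       # largest positive value the format can hold
    out = []
    for x in samples:
        if rng is not None:
            # triangular dither spanning +/- 1 LSB at this bit depth
            x += (rng.random() - rng.random()) / levels
        q = round(x * levels) / levels
        out.append(max(-1.0, min(top, q)))
    return out


def generation_loss(samples, passes=20, start_bits=24, seed=0):
    """Hypothetical re-export loop: each pass is one bit shallower than the last."""
    rng = random.Random(seed)
    for p in range(passes):
        bits = max(1, start_bits - p)
        samples = quantize(samples, bits, rng)
    return samples
```

Without dither, each coarser grid here contains the finer grid's values only at its own steps, so the result would be close to a single quantization at the final depth; the fresh dither noise on every pass is what lets the degradation compound from one "export" to the next.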

In terms of technical theory, it was my most ambitious project to date. I studied Curtis Roads, a pioneer of granular synthesis, and swore by his work. There was rarely a sample in the project, and a lot of it was experimental in both technique and theory, sometimes even accidental. There were so many times I had the Ableton quantization grid turned off and was arranging sounds grain by grain, millisecond by millisecond. It was draining, but tenfold rewarding.
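Granular synthesis builds sound out of very short windowed "grains", typically somewhere between about 1 and 100 milliseconds long. The author placed grains by hand on Ableton's timeline; the stdlib Python sketch below only illustrates the underlying idea by scattering Hann-windowed grains, read from random positions in a source, at a fixed onset rate. Grain length, density, the random read positions and the function names are assumptions chosen for illustration.

```python
import math
import random


def hann(n):
    """Hann window of length n (n > 1), zero at both ends."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]


def granulate(source, sr, grain_ms=40.0, density_hz=100.0, duration_s=1.0, seed=0):
    """Fixed-rate grain onsets, each grain read from a random position in `source`."""
    grain_len = int(sr * grain_ms / 1000)
    if len(source) <= grain_len:
        raise ValueError("source must be longer than one grain")
    hop = int(sr / density_hz)          # samples between successive grain onsets
    window = hann(grain_len)
    rng = random.Random(seed)
    out = [0.0] * int(sr * duration_s)
    for onset in range(0, len(out) - grain_len + 1, hop):
        start = rng.randrange(0, len(source) - grain_len)  # random read position
        for i in range(grain_len):
            out[onset + i] += source[start + i] * window[i]
    return out
```

With 40 ms grains starting every 10 ms, up to four grains overlap at any moment; shrinking `grain_ms` or `density_hz` moves the result from a smeared texture toward audible clicks and pulses, the territory the author was sculpting millisecond by millisecond.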